10 Most Popular ML Algorithms For Beginners-2024

Are you curious about machine learning but unsure where to start? You’re not alone! With so many algorithms and techniques, diving into the world of ML Algorithms can seem confusing. That’s why we’ve put together this guide on the 10 most popular machine learning algorithms for beginners.

Whether you’re a student, a professional looking to upskill, or just someone with a keen interest in AI, this article will introduce you to the foundational algorithms in a simple way.

We’ll break down each algorithm, explaining how it works and why it’s important, without getting too technical. From decision trees and linear regression to k-nearest neighbors and support vector machines, you’ll get a clear picture of the basics. By the end of this guide, you’ll have a solid understanding of these key algorithms and be well on your way to exploring the fascinating world of machine learning. So, let’s get started and unlock the power of ML Algorithms together!

What Are ML Algorithms?

Machine learning (ML) algorithms are sets of rules and techniques that computers use to learn from data and make predictions or decisions without being explicitly programmed to do so. Instead of following hard-coded instructions, these algorithms identify patterns and relationships within the data, allowing the computer to improve its performance over time.

In simple terms, think of ML algorithms as a recipe. Just as a recipe provides steps to turn raw ingredients into a finished dish, ML algorithms provide steps for the computer to process raw data and produce useful results, like predicting future trends or recognizing images.

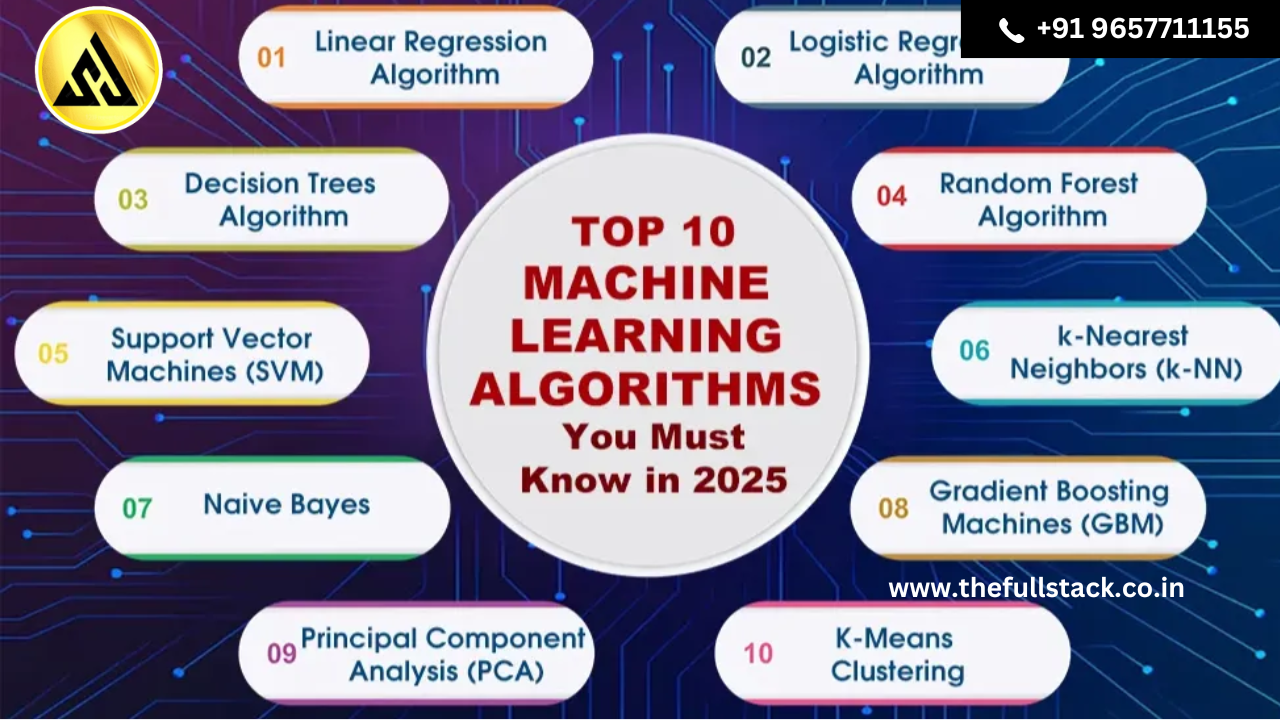

What Are The Top 10 Most Popular ML Algorithms

Below are the 10 most popular ML algorithms that every beginner should know. We will explain each of them later in this guide.

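| Top 10 Most Popular ML Algorithms |
| --- |
| 1. Linear Regression |
| 2. Logistic Regression |
| 3. Decision Tree |
| 4. SVM (Support Vector Machine) |
| 5. Naive Bayes |
| 6. K-Nearest Neighbors (KNN) |
| 7. K-Means |
| 8. Random Forest |
| 9. Dimensionality Reduction Algorithms |
| 10. Gradient Boosting |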

Types Of Machine Learning Techniques

Three types of machine learning techniques are commonly used across industries: supervised learning, unsupervised learning, and reinforcement learning. Let’s look at each one in turn.

1. Supervised Learning

Supervised learning is a type of machine learning where the model is trained on labeled data, which means the input data is paired with the correct output. It’s like teaching a computer by giving examples.

You provide the computer with a bunch of examples where you already know the answers, and the computer learns from these examples to make predictions or decisions about new data it hasn’t seen before.

Some of the common supervised learning ML algorithms include:

- Linear Regression: Linear regression finds the relationship between input variables and a continuous output by fitting a straight line to the data.

- Logistic Regression: Logistic regression predicts the probability of a binary outcome (yes/no, true/false) based on one or more input variables.

- Decision Trees: Decision trees divide the data into smaller groups based on input features, using a series of if-else conditions.

- Random Forest: Random forest is an ensemble of decision trees that combines the predictions of multiple trees to improve accuracy.

2. Unsupervised Learning

Unsupervised learning is a type of machine learning where the model is trained on unlabeled data, meaning there are no predefined categories or outputs. It’s like organizing your closet without labels.

You hand the computer a pile of clothes without telling it what each item is. The computer has to find patterns and similarities in the data on its own.

Some of the commonly used unsupervised ML algorithms include:

- K-means clustering: K-means clustering partitions data into clusters based on similarity, aiming to minimize the distance between data points within the same cluster.

- Hierarchical Clustering: Hierarchical clustering builds a tree-like hierarchy of nested clusters, either by repeatedly merging the most similar clusters or by repeatedly splitting larger clusters into smaller ones.

- Principal Component Analysis (PCA): PCA reduces the dimensionality of a dataset by transforming it into a new coordinate system to capture the most important information.

3. Reinforcement Learning

Reinforcement learning is a type of machine learning where an agent learns to make decisions by taking actions in an environment to achieve a specific goal. The agent receives feedback in the form of rewards or punishments based on its actions.

It’s like training a pet. You provide the pet with rewards when it performs a desired action and punish it when it does something wrong. Over time, the pet learns which actions lead to rewards and which ones lead to punishment.

Some of the most commonly used reinforcement ML algorithms include:

- Q-learning: Q-learning is a method for agents to learn how to make decisions by maximizing the expected reward in a given environment.

- Deep Q-Networks (DQN): DQN is a reinforcement learning technique that uses a neural network to approximate the optimal action-value function.

- Policy Gradient Methods: Policy gradient methods directly optimize the policy function, which determines the agent’s action selection strategy, by maximizing the expected cumulative reward.
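To see how rewards shape an agent's behavior, here is a minimal Q-learning sketch in plain Python. The environment is a made-up corridor of five positions where the agent earns a reward only when it reaches the last one. The names `step` and `q_learning`, the learning rate, the discount factor, and the number of episodes are all assumptions chosen for illustration.

```python
import random

N_STATES = 5          # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = [0, 1]      # 0 = move left, 1 = move right

def step(state, action):
    """Move one position and return (next_state, reward)."""
    next_state = max(state - 1, 0) if action == 0 else state + 1
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def q_learning(episodes=200, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    # One row per state, one column per action, all starting at zero.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            # Explore with random actions; Q-learning still learns the best ones.
            action = rng.choice(ACTIONS)
            next_state, reward = step(state, action)
            # Move the estimate toward reward + discounted best future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q = q_learning()
# Greedy policy: in each non-goal state, pick the action with the higher value.
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy picks "move right" in every state, because that is the shortest path to the reward.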

10 Most Popular ML Algorithms

Let us learn more about the popular ML algorithms that we have discussed above, understanding each one in detail and getting familiar with the topic.

1. Linear Regression

Linear regression is a fundamental statistical method used for predicting the value of a dependent variable based on the value of one or more independent variables. Linear regression finds the linear relationship between variables by fitting a linear equation to observed data.

- Dependent Variable (Y): The variable you want to predict or explain.

- Independent Variable (X): The variable you use to make predictions.

The relationship between the dependent variable Y and the independent variable X is modeled by the linear equation:

Y = a * X + b

Y – Dependent Variable

a – Slope

X – Independent variable

b – Intercept

The coefficients a and b in this equation are found by minimizing the sum of the squared vertical distances (residuals) between the data points and the regression line. This approach is known as the least squares method.
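To make the fitting step concrete, here is a minimal sketch in plain Python that computes the slope and intercept for a single independent variable using the standard least-squares formulas. The function name `fit_line` and the study-hours data are made up for illustration.

```python
def fit_line(xs, ys):
    """Return slope a and intercept b that minimize the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form least-squares solution for one independent variable.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

# Hypothetical data: hours studied (X) and exam score (Y).
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

a, b = fit_line(hours, scores)
print(f"Y = {a} * X + {b}")  # Y = 2.0 * X + 50.0
```

With these numbers every extra hour of study adds two points, so the fitted line passes through all five data points exactly. Real data is noisier, and the line will only pass close to the points.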

2. Logistic Regression

Logistic regression is a supervised learning algorithm used for binary classification tasks. Unlike linear regression, which predicts a continuous value, logistic regression predicts the probability that a given input belongs to a certain class. It uses the logistic function to map predicted values to probabilities.

The logistic function outputs a value between 0 and 1, which represents the probability of the input belonging to the positive class. It’s simple and efficient, and it is often used for problems such as spam detection, disease prediction, and other binary classification tasks.
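The logistic function itself is only one line of code. The sketch below shows how a linear score is squeezed into a probability and then turned into a yes/no answer. The weight, the bias, and the 0.5 cut-off are assumed values for illustration; in practice the weight and bias are learned from training data.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(x, weight, bias):
    """Probability of the positive class for a single input feature x."""
    return sigmoid(weight * x + bias)

# Assumed (not learned) parameters for illustration.
weight, bias = 1.5, -3.0

for x in [0.0, 2.0, 4.0]:
    p = predict_proba(x, weight, bias)
    label = 1 if p >= 0.5 else 0
    print(x, round(p, 3), label)
```

Inputs with a large positive score get a probability close to 1, inputs with a large negative score get a probability close to 0, and a score of exactly 0 gives 0.5.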

3. Decision Tree

A decision tree is a supervised learning algorithm used for both classification and regression tasks. It works by recursively splitting the data into subsets based on the value of an input feature, creating a tree-like model of decisions. Each internal node represents a feature, each branch represents a decision rule, and each leaf node represents an outcome.

The splitting continues until the subsets in each branch are as homogeneous as possible, meaning that most of the data points in a subset share the same outcome. Decision trees are easy to interpret and visualize, but they are prone to overfitting if they are not pruned properly. They are useful in applications like risk assessment and predictive modeling.
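How does a tree decide where to split? One common measure of homogeneity is Gini impurity, which is 0 when every item in a group has the same label. The sketch below tries each possible threshold on a single feature and keeps the one that gives the purest groups. The function names and the customer data are hypothetical, and a full decision tree would repeat this search recursively on each resulting group.

```python
def gini(labels):
    """Gini impurity: 0.0 when all labels are identical."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(values, labels):
    """Try midpoints between sorted unique values; return (threshold, weighted impurity)."""
    best = (None, float("inf"))
    unique = sorted(set(values))
    for lo, hi in zip(unique, unique[1:]):
        threshold = (lo + hi) / 2
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        # Weight each side's impurity by how many items it contains.
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best

# Hypothetical data: customer age and whether they bought a product.
ages = [22, 25, 30, 35, 40, 52]
bought = ["no", "no", "no", "yes", "yes", "yes"]

threshold, impurity = best_split(ages, bought)
print(threshold, impurity)  # 32.5 0.0
```

Here the rule "age <= 32.5" separates the two groups perfectly, so the impurity after the split is 0.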

4. SVM (Support Vector Machine) Algorithm

The Support Vector Machine (SVM) algorithm is a powerful supervised learning model used for classification and regression tasks. It works by finding the hyperplane that best separates data points of different classes in a high-dimensional space.

The hyperplane is chosen to maximize the margin, which is the distance between the hyperplane and the closest points of each class (the support vectors). If the data is not linearly separable, SVM can use kernel functions to transform the data into a higher-dimensional space where a hyperplane can be used to separate the classes. SVMs are effective in high-dimensional spaces and are widely used in fields such as bioinformatics, image recognition, and text classification.
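Training an SVM takes an optimizer, but using a trained linear SVM is simple. The sketch below does not train anything: it takes an assumed hyperplane (the weights `w` and offset `b` are picked by hand for this toy data) and shows how a point is classified and how the distance from the hyperplane to the nearest point is measured.

```python
import math

def decision_value(w, b, x):
    """Signed score w . x + b; its sign tells which side of the hyperplane x is on."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(w, b, x):
    return 1 if decision_value(w, b, x) >= 0 else -1

def distance_to_hyperplane(w, b, x):
    norm = math.sqrt(sum(wi * wi for wi in w))
    return abs(decision_value(w, b, x)) / norm

# Assumed hyperplane x1 + x2 - 3 = 0 (hand-picked, not learned).
w, b = [1.0, 1.0], -3.0

# Hypothetical labeled points: (features, class).
points = [([1.0, 1.0], -1), ([0.0, 2.0], -1), ([2.0, 2.0], 1), ([3.0, 1.0], 1)]

predictions = [classify(w, b, x) for x, _ in points]
nearest = min(distance_to_hyperplane(w, b, x) for x, _ in points)
print(predictions, round(nearest, 3))
```

An SVM searches for the `w` and `b` that make this nearest distance as large as possible while still classifying the training points correctly.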

5. Naive Bayes Algorithm

Naive Bayes is a probabilistic classification algorithm based on Bayes’ Theorem, which describes the probability of an event based on prior knowledge of conditions related to the event. The algorithm is called “naive” because it assumes that all features in the dataset are independent of each other given the class, which is rarely true in real-world scenarios.

Despite this simplification, Naive Bayes performs well in many applications, particularly those involving text data, such as spam filtering, sentiment analysis, and document classification. It calculates the posterior probability for each class and predicts the class with the highest probability.
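Here is a minimal sketch of a Naive Bayes spam filter in plain Python. It counts how often each word appears in each class, then scores a new message by adding the log of the class prior to the log probability of each word. The four training messages are invented, and add-one (Laplace) smoothing is an assumption added so that unseen words do not produce a zero probability.

```python
import math
from collections import Counter

def train(docs):
    """docs: list of (words, label). Returns class counts, per-class word counts, vocabulary."""
    class_counts = Counter()
    word_counts = {}
    vocab = set()
    for words, label in docs:
        class_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return class_counts, word_counts, vocab

def predict(words, class_counts, word_counts, vocab):
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Start with the log prior probability of the class.
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for w in words:
            # Add-one smoothing keeps unseen words from zeroing out the score.
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        scores[label] = score
    # The class with the highest posterior score wins.
    return max(scores, key=scores.get)

# Hypothetical training messages.
docs = [
    ("win free prize now".split(), "spam"),
    ("free cash win".split(), "spam"),
    ("meeting at noon".split(), "ham"),
    ("lunch meeting tomorrow".split(), "ham"),
]
model = train(docs)

print(predict("free prize".split(), *model))        # spam
print(predict("meeting tomorrow".split(), *model))  # ham
```

Working with logarithms turns the product of many small probabilities into a sum, which avoids numbers that are too small for the computer to represent.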

6. K-Nearest Neighbors (KNN) Algorithm

K-Nearest Neighbors (KNN) is a simple, instance-based learning algorithm used for classification and regression tasks. It operates by finding the ‘k’ closest data points to a given input in the feature space. For classification, it predicts the majority class among those neighbors; for regression, it predicts the average of their values.

KNN is non-parametric and does not make any assumptions about the underlying data distribution. It is easy to implement and understand but can be computationally expensive, especially with large datasets. KNN is commonly used in pattern recognition, recommendation systems, and image analysis.
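The whole algorithm fits in a few lines. The sketch below classifies a new point by majority vote among its three nearest neighbors, using straight-line (Euclidean) distance. The function name `knn_predict`, the choice of k = 3, and the data points are assumptions for illustration.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to query."""
    # Compute the distance from the query to every training point, closest first.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical 2D data with two groups.
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["small", "small", "small", "large", "large", "large"]

print(knn_predict(points, labels, (2, 2)))  # small
print(knn_predict(points, labels, (6, 5)))  # large
```

Notice that there is no training step: every prediction scans the whole dataset, which is why KNN becomes slow as the data grows.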

7. K-Means

K-Means is an unsupervised clustering algorithm that partitions a dataset into ‘k’ distinct clusters. It works by randomly initializing ‘k’ cluster centroids and then iteratively refining them. Each data point is assigned to the nearest centroid, and the centroids are recalculated as the mean of all points in the cluster.

This process repeats until the centroids no longer change significantly. K-Means is simple and fast, making it suitable for large datasets. However, it requires specifying the number of clusters in advance. It is used in market segmentation, image compression, and anomaly detection.
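The assign-then-update loop described above can be sketched as follows. To keep the result reproducible, this example passes the starting centroids in by hand instead of choosing them randomly; that choice, the function name `k_means`, the tolerance, and the data are all assumptions for illustration.

```python
import math

def k_means(points, centroids, tol=1e-6, max_iters=100):
    """Refine the given starting centroids; return (centroids, clusters)."""
    for _ in range(max_iters):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points.
        new_centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else old
            for cluster, old in zip(clusters, centroids)
        ]
        # Stop once the centroids have essentially stopped moving.
        shift = max(math.dist(old, new) for old, new in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift < tol:
            break
    return centroids, clusters

# Hypothetical 2D data with two obvious groups.
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (8.0, 9.0), (9.0, 8.0)]
start = [(1.0, 1.0), (9.0, 8.0)]  # hand-picked starting centroids (k = 2)

centroids, clusters = k_means(points, start)
print(centroids)
```

With random starting centroids the algorithm can settle on different clusters from run to run, so it is common to run it several times and keep the best result.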

8. Random Forest Algorithm

Random Forest is an ensemble learning algorithm that combines multiple decision trees to improve the performance and robustness of the model. Each tree in the forest is trained on a random subset of the data and a random subset of the features, which introduces diversity among the trees.

For regression, the final prediction is the average of the predictions from all the trees; for classification, it is the majority vote. This approach reduces the risk of overfitting and improves generalization. Random Forests are powerful and versatile, used in applications such as credit scoring, medical diagnosis, and stock market prediction.
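The sketch below illustrates the two sources of randomness and the final vote. To stay short, each "tree" is a one-split stump rather than a full decision tree, and each stump looks at just one randomly chosen feature. These simplifications, the function names, and the data are assumptions for illustration and are not how production libraries implement random forests.

```python
import random
from collections import Counter

def train_stump(rows, labels, feature):
    """Fit a one-split tree on one feature; return (feature, threshold, left_label, right_label)."""
    best = None
    values = sorted(set(r[feature] for r in rows))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [l for r, l in zip(rows, labels) if r[feature] <= t]
        right = [l for r, l in zip(rows, labels) if r[feature] > t]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(l != left_label for l in left) + sum(l != right_label for l in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    if best is None:
        # Every sampled value is identical: always predict the majority label.
        majority = Counter(labels).most_common(1)[0][0]
        return feature, float("inf"), majority, majority
    return (feature,) + best[1:]

def train_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Random subset of the data: a bootstrap sample drawn with replacement.
        idx = [rng.randrange(len(rows)) for _ in rows]
        sample_rows = [rows[i] for i in idx]
        sample_labels = [labels[i] for i in idx]
        # Random subset of the features: here, a single feature per stump.
        feature = rng.randrange(len(rows[0]))
        forest.append(train_stump(sample_rows, sample_labels, feature))
    return forest

def predict(forest, row):
    """Majority vote across all stumps."""
    votes = [left if row[f] <= t else right for f, t, left, right in forest]
    return Counter(votes).most_common(1)[0][0]

# Hypothetical data with two features per row.
rows = [(1, 1), (2, 1), (1, 2), (8, 8), (9, 8), (8, 9)]
labels = ["low", "low", "low", "high", "high", "high"]

forest = train_forest(rows, labels)
print(predict(forest, (1.5, 1.5)), predict(forest, (8.5, 8.5)))
```

An individual stump can be wrong when its sample happens to be unrepresentative, but the vote across many stumps smooths those mistakes out.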

9. Dimensionality Reduction Algorithms

Dimensionality reduction algorithms aim to reduce the number of input features in a dataset while retaining as much information as possible. These algorithms help reduce computational complexity and can improve model performance. Principal Component Analysis (PCA), described earlier, is a common example.
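As a small illustration, the sketch below applies Principal Component Analysis (PCA) to reduce two features to one. It finds the direction along which the data varies the most and describes each point by its position along that direction. It uses the closed-form eigenvalue formula for a 2x2 covariance matrix, so it only works for two features; the function name and the data are assumptions for illustration.

```python
import math

def pca_2d_to_1d(points):
    """Project 2D points onto their first principal component."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]
    # Entries of the sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum(x * x for x, _ in centered) / (n - 1)
    syy = sum(y * y for _, y in centered) / (n - 1)
    sxy = sum(x * y for x, y in centered) / (n - 1)
    # Largest eigenvalue of the symmetric 2x2 covariance matrix.
    top = (sxx + syy) / 2 + math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    # Matching eigenvector: the direction of greatest variance.
    if sxy != 0:
        vx, vy = sxy, top - sxx
    else:
        vx, vy = (1.0, 0.0) if sxx >= syy else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    direction = (vx / norm, vy / norm)
    # One number per point: its coordinate along the principal direction.
    projected = [x * direction[0] + y * direction[1] for x, y in centered]
    explained = top / (sxx + syy)  # share of total variance that is kept
    return direction, projected, explained

# Hypothetical data in which the second feature is always twice the first.
points = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

direction, projected, explained = pca_2d_to_1d(points)
print(direction, explained)
```

Because the two features in this toy dataset move together perfectly, a single number per point keeps essentially all of the variance. Real datasets lose some information, and the explained share tells you how much is kept.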

10. Gradient Boosting Algorithm

Gradient Boosting is an ensemble learning technique that builds a strong model by combining the predictions of several weak learners (typically shallow decision trees).

Gradient Boosting builds models sequentially, with each new model correcting the errors of the previous ones. This method is highly effective for various machine learning tasks, offering high accuracy and flexibility.
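The "correct the previous errors" idea can be sketched for a simple regression problem. Each round fits a one-split stump to the current errors (residuals) and adds a small fraction of its prediction to the model. The function names, the learning rate of 0.1, the 50 rounds, and the data are assumptions for illustration; real libraries use deeper trees and many refinements.

```python
def fit_stump(xs, residuals):
    """Find the split that best predicts the residuals; return (threshold, left_mean, right_mean)."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        error = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, t, lm, rm)
    return best[1:]

def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    base = sum(ys) / len(ys)          # start from the average target value
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # Residuals are the errors the model still makes on the training data.
        residuals = [y - p for y, p in zip(ys, preds)]
        t, lm, rm = fit_stump(xs, residuals)
        stumps.append((t, lm, rm))
        # Add a small step in the direction that reduces those errors.
        preds = [p + learning_rate * (lm if x <= t else rm) for x, p in zip(xs, preds)]
    return base, learning_rate, stumps

def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(lm if x <= t else rm for t, lm, rm in stumps)

def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

# Hypothetical data with a jump between x = 3 and x = 4.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9]

model = gradient_boost(xs, ys)
error_before = mse(ys, [model[0]] * len(ys))
error_after = mse(ys, [predict(model, x) for x in xs])
print(error_before, error_after)
```

The error after boosting is far smaller than the error of the starting guess, even though each individual stump is a very weak model on its own.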

How Learning ML Algorithms Helps Business

1. Logistic Regression

Logistic regression helps businesses predict outcomes like whether a customer will buy a product or not. By analyzing past data, it calculates the probability of an event happening. This makes it a valuable tool for marketing and financial risk assessment.

2. Decision Trees

Decision trees make complex decisions easier by breaking them down into simple yes/no questions. For example, they can assist doctors in diagnosing medical conditions based on patient symptoms. This improves efficiency and accuracy in decision-making.

3. Support Vector Machines (SVM)

SVMs are great for classifying data into different categories. Businesses use them for tasks like image recognition and text classification (filtering out spam emails). By drawing clear boundaries between different types of data, SVMs help improve accuracy in various applications.

4. Naive Bayes

Naive Bayes is a simple yet powerful ML algorithm for classification tasks. It’s widely used for spam filtering, where it learns to identify spam emails by analyzing word patterns. It’s also used in sentiment analysis to understand customer opinions from reviews or feedback, helping businesses improve their products and services.

5. K-Nearest Neighbors (KNN)

KNN works by comparing new data points to the most similar existing data points. It’s used in recommendation systems to suggest products based on what similar customers have bought. It’s also useful in fraud detection, identifying suspicious transactions by comparing them to the legitimate ones.

6. Random Forest

Random forest improves decision-making by combining multiple decision trees. It’s used in risk management to predict financial risks by analyzing historical data.

7. Dimensionality Reduction Algorithms

Dimensionality reduction simplifies large datasets by reducing the number of features while maintaining important information. This makes data easier to visualize and interpret, which supports faster decision-making.

8. Gradient Boosting

Gradient boosting helps build strong predictive models by sequentially improving on previous model errors. It is widely used in sales forecasting to predict future trends, helping businesses manage inventory and plan marketing campaigns.


ML Algorithms FAQs

How do machine learning algorithms work?

Machine learning algorithms generally work by analyzing data, identifying relevant patterns, and creating models based on those patterns that can make predictions on new, unseen data. They learn from experience, adjusting their parameters to minimize errors and improve accuracy.

What are the types of machine learning algorithms?

The main types of machine learning algorithms are supervised learning, unsupervised learning, and reinforcement learning.

What is the difference between supervised and unsupervised learning algorithms?

Supervised learning algorithms learn from labeled data, where the correct outputs are provided. They predict outcomes based on input features. Unsupervised learning algorithms, on the other hand, work with unlabeled data and learn patterns and structures without any prior guidance.
